Neon, an App That Paid Users to Record Their Calls, Leaked Their Conversations and Shut Down

At the end of September 2025, Neon, an app that paid users to record their phone calls and sold the data to AI companies, briefly surged to the second spot on the Apple App Store. Its rise ended as abruptly as it began, however, when journalists discovered a serious vulnerability that exposed users’ phone numbers, call recordings, and conversation transcripts to anyone online.

An App That Paid for Your Voice

According to Neon Mobile’s website, the company paid users 30 cents per minute for calls to other Neon users and up to $30 per day for calls to non-Neon numbers. It also offered referral bonuses for signing up new users.

The business model was straightforward but controversial: sell the recorded calls to AI companies to help them train, test, and refine conversational models.

Data from Appfigures shows that on September 24, 2025, Neon was downloaded more than 75,000 times in a single day, making it one of the top five apps in the Social Networking category on the U.S. App Store.

A Simple Test Revealed a Critical Flaw

As reported by TechCrunch, the app was soon taken offline, and its return remains uncertain.

TechCrunch journalists found the vulnerability during a brief test. Neon’s servers did not properly restrict access: they confirmed that a user was logged in but not whether that user was entitled to the data requested, so any authenticated user could retrieve data belonging to other accounts.
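This class of flaw is commonly called broken object-level authorization, or an insecure direct object reference (IDOR): the server checks who the user is, but not whether they own the record they ask for. The Python sketch below is a hypothetical illustration, not Neon’s actual code, which has not been published. The `CallRecord` type, the in-memory `RECORDS` store, and both handler functions are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    call_id: str
    owner_id: str  # simplification: a real call has two parties; here one account owns each record
    transcript: str


class Forbidden(Exception):
    """Raised when a user requests a record they are not allowed to see."""


# Hypothetical in-memory store standing in for a real database.
RECORDS = {
    "c1": CallRecord("c1", owner_id="alice", transcript="hi bob"),
    "c2": CallRecord("c2", owner_id="bob", transcript="hi carol"),
}


def get_call_vulnerable(requesting_user: str, call_id: str) -> CallRecord:
    # The caller is assumed to be authenticated upstream, but nothing checks
    # ownership: any logged-in user can fetch any call_id they guess or enumerate.
    return RECORDS[call_id]


def get_call_checked(requesting_user: str, call_id: str) -> CallRecord:
    record = RECORDS.get(call_id)
    # Treat "does not exist" and "not yours" identically so IDs cannot be probed.
    if record is None or record.owner_id != requesting_user:
        raise Forbidden(call_id)
    return record
```

Here `get_call_vulnerable("alice", "c2")` hands Alice a record owned by Bob, while `get_call_checked("alice", "c2")` raises `Forbidden`. Returning the same error for missing and unowned records is one common design choice, since it avoids revealing which IDs exist.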

To test the app, the journalists created a new account on a separate iPhone, verified a phone number, and inspected Neon’s network traffic using Burp Suite, a widely used security testing tool that intercepts the traffic between an app and its servers.

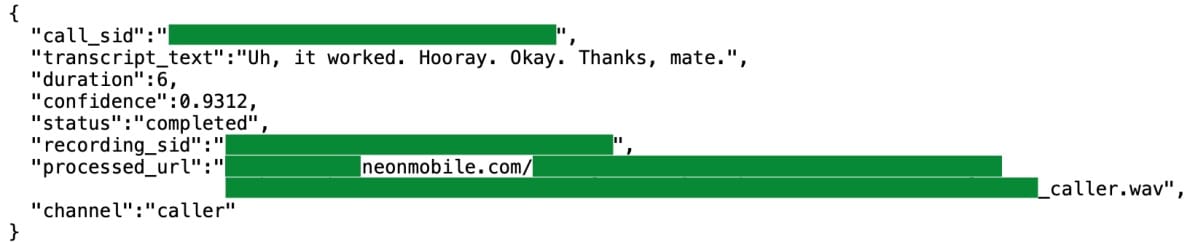

After the journalists made several test calls, the app displayed a list of recent calls and the earnings for each. The captured traffic, however, also contained text transcripts of the conversations and direct URLs to the audio recordings, which could be played simply by opening the links in a browser.

A screenshot published by TechCrunch showed part of a transcript of a call between two of its journalists, confirming that Neon’s recording and transcription features worked, and that their output was dangerously exposed.
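Bare, permanent links to audio files mean that anyone who obtains a link can play the recording. One common mitigation, sketched below in Python under stated assumptions, is to hand out short-lived links signed with a server-side secret (HMAC-SHA256 here), and to issue them only after the server has confirmed that the requester may access that recording. The secret, the `/recordings/...` paths, and the function names are hypothetical; production systems often use their storage provider’s built-in signed-URL feature rather than hand-rolled code like this.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical server-side secret; it must never ship inside the app.
SECRET_KEY = b"replace-with-a-random-server-secret"


def _signature(path: str, expires_at: int, key: bytes) -> str:
    # Sign the path and the expiry time together so neither can be altered.
    message = f"{path}|{expires_at}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def sign_url(path: str, expires_at: int, key: bytes = SECRET_KEY) -> str:
    """Return a link to `path` that stops working after `expires_at` (Unix time)."""
    query = urlencode({"expires": expires_at, "sig": _signature(path, expires_at, key)})
    return f"{path}?{query}"


def verify_url(url: str, now: Optional[int] = None, key: bytes = SECRET_KEY) -> bool:
    """Accept the link only if it is unexpired and its signature matches."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires_at = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, IndexError, ValueError):
        return False  # unsigned or malformed link
    if now is None:
        now = int(time.time())
    if now > expires_at:
        return False  # link has expired
    # Constant-time comparison avoids leaking the expected signature via timing.
    return hmac.compare_digest(_signature(parsed.path, expires_at, key), sig)
```

For example, `sign_url("/recordings/c1.mp3", expires_at=1_000)` yields a link that `verify_url` accepts at `now=999` but rejects at `now=1_001`, and a bare `/recordings/c1.mp3` with no signature is rejected outright. Signing alone does not replace the per-user ownership check; it only limits how long, and by whom, a link that was legitimately issued can be reused.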

The Breach Went Beyond Individual Accounts

Worse, the journalists found that Neon’s servers also allowed access to other users’ recordings and transcripts. In multiple cases, they were able to retrieve other accounts’ recent call data, including links to audio files and text transcripts.

Each record contained sensitive metadata: the phone numbers of both parties, the call duration, timestamps, and earnings. According to TechCrunch, many users appeared to be calling real people, possibly without their consent, in order to earn money through the app.

Developer Response

The journalists disclosed their findings to Neon’s founder, Alex Kiam, who had not responded to earlier press inquiries. Following the disclosure, Kiam shut down the app’s servers and began notifying users that the app had been temporarily suspended.

However, the message sent to users did not mention the data exposure or the discovered vulnerability.

“Your data privacy is our top priority. We want to ensure it is fully protected even during this period of active growth. Therefore, we are temporarily disabling the app to add additional layers of security,” the notification stated.

Neon’s developers declined to say whether the app had undergone a security review before launch. It also remains unclear whether the company keeps logs or other technical records that could show whether anyone else exploited the flaw or stole user data.

What Happens Next

TechCrunch reports that it is still unknown when, or whether, Neon will return. Neither Apple nor Google has said whether the app violated their respective developer policies, and both companies declined to respond to TechCrunch’s requests for comment.

The Neon case highlights a growing tension in the AI data economy — where the race for training data can outpace security, privacy, and even legality.