Slop Is Served! An Editor’s Column

A new phrase has joined the digital dictionary: “AI slop.” It’s the label people slap on the sloppy, off-putting output of neural networks: the distorted hands, the uncanny smiles, the soulless text. The word has become shorthand for everything people hate about generative AI.

But since slop seems unavoidable, maybe it’s worth asking: what comes after it?

The Fear of the Slopocalypse

The worry is easy to find. Even the creators of Kurzgesagt, long admired for their science videos, recently voiced concern that AI-generated junk will flood the internet. Their fear: when everything looks convincing but is riddled with errors, it’ll be impossible to tell truth from fabrication.

And indeed, the wave is coming. After text and images, AI-generated video is now rolling in. TikTok is filling with synthetic reels, and fully generated video platforms are already in testing. No one really asked for this, but that’s never stopped tech companies before.

Old Fears, New Faces

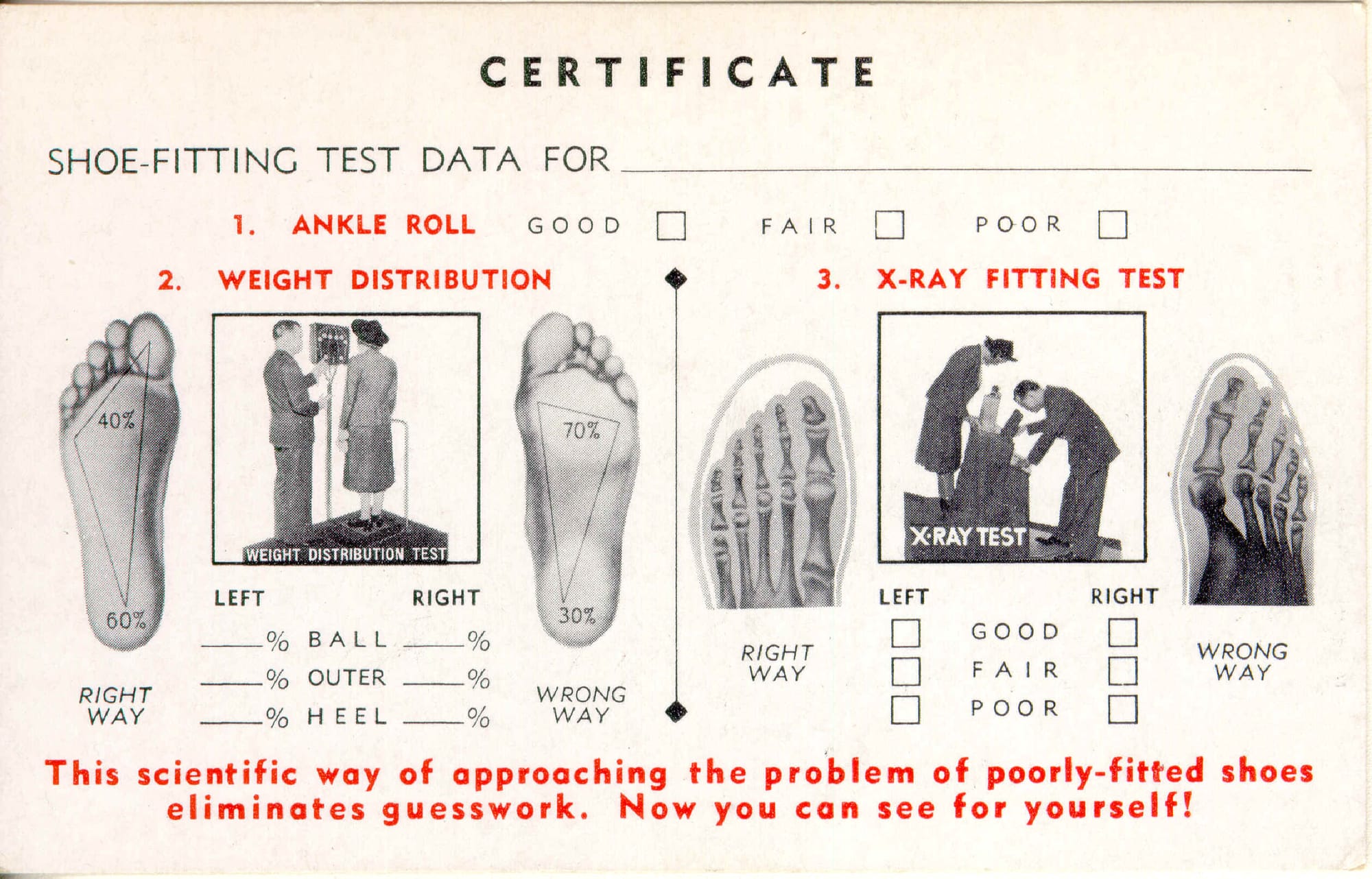

Every major innovation comes with its moral panic. Alvin Toffler called it future shock: when technology outpaces our ability to process it.

Back in the day, electric light bulbs were accused of ruining sleep and sanity. Early X-ray machines were used to “scan” feet in shoe stores, giving real burns to the clerks operating them. Humanity has always stumbled a bit before learning to use its inventions safely.

AI slop spreads faster, yes, but at least it won’t give you radiation poisoning.

The Real Threat Isn’t Slop

The problem isn’t that AI is producing nonsense; it’s that soon it won’t be. The true danger lies in plausibility. Hyper-realistic synthetic media can fabricate events, bend reality, and manipulate public opinion.

This isn’t new either: fake news already showed how easily truth can be gamed. AI just scales it.

That’s why the real challenge isn’t stopping slop, but verifying authenticity.

A Glimpse at Solutions

Platforms are experimenting. On X (formerly Twitter), Community Notes lets users collaboratively add context to misleading posts. The system relies on a clever “bridging” algorithm: a note is shown only when people who usually disagree with each other both rate it as helpful. If opposing camps agree a note is accurate, it probably is.

It’s far from perfect, but it’s a start. The same principle could one day underpin AI content verification systems, using consensus to counter manipulation.
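To make the bridging idea concrete, here is a toy sketch in Python. It is not X’s actual algorithm, which its published documentation describes as a matrix-factorization model over the full history of ratings. This sketch assumes, hypothetically, that every rater has a single viewpoint score between -1 and +1, and that a note counts as “bridged” only when raters on both sides of that axis mostly found it helpful. The names `Rating` and `is_bridged`, and the thresholds, are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    # Hypothetical viewpoint score: -1.0 is one camp, +1.0 the other.
    rater_viewpoint: float
    helpful: bool


def is_bridged(ratings, threshold=0.7, min_per_side=3):
    """Toy bridging check: a note passes only if raters on BOTH sides
    of the viewpoint axis mostly rated it helpful."""
    # Raters with a viewpoint of exactly 0 are ignored in this toy version.
    left = [r.helpful for r in ratings if r.rater_viewpoint < 0]
    right = [r.helpful for r in ratings if r.rater_viewpoint > 0]
    if len(left) < min_per_side or len(right) < min_per_side:
        return False  # not enough cross-perspective evidence yet
    left_share = sum(left) / len(left)
    right_share = sum(right) / len(right)
    return left_share >= threshold and right_share >= threshold


# One camp loves the note, the other rejects it: no consensus.
one_sided = [Rating(-0.8, True)] * 5 + [Rating(0.7, False)] * 5
# Both camps rate it helpful: the note "bridges" the divide.
bridged = [Rating(-0.8, True)] * 4 + [Rating(0.7, True)] * 4

print(is_bridged(one_sided))  # False
print(is_bridged(bridged))    # True
```

Requiring agreement on each side separately, rather than a simple overall majority, is what stops a large, like-minded group from pushing a note through on its own.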

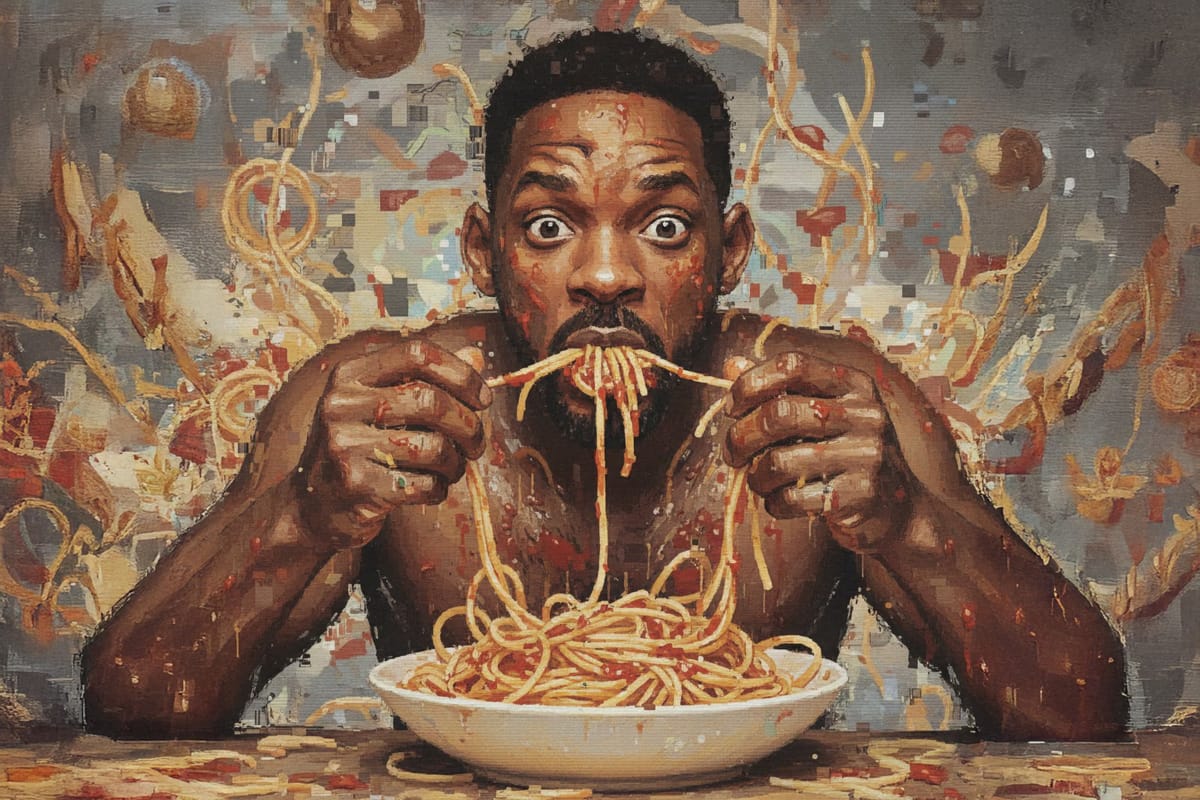

The Comedy of Errors

For now, the “slop” itself is harmless and often hilarious. Generated videos where faces warp, limbs vanish, and eyes look just a bit too alive fall squarely into the uncanny valley. People aren’t outraged by their realism; they’re repulsed by their weirdness.

And that’s fine. Save those clips. They’ll make great historical artifacts.

One of the first viral slop videos, Will Smith eating spaghetti, appeared in March 2023. Less than three years later, AI can recreate that scene in a form nearly indistinguishable from a Hollywood production.

AI still messes up, apologizes, and messes up again. But not for long.

When the Problem Becomes the Solution

How many years until the models are no dumber than the average internet user? By then, maybe we won’t even need elaborate authenticity systems.

At this rate, the slop will refine itself, and the mess will end up clearing its own table.