The Whistler Who Hacked the World: The Joseph Engressia Story

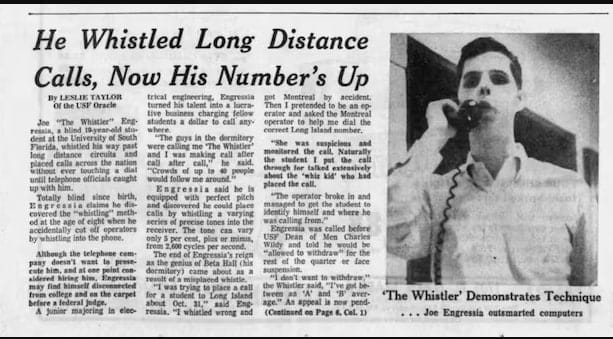

Joseph Engressia could make free phone calls to anywhere on Earth with nothing but his voice. No tools, no equipment, just a whistle at precisely 2600 Hz. This is his story.

The Discovery

Engressia was born blind. Like many people with congenital blindness, he developed exceptional hearing and musical ability