Massive "YouTube Ghost Network" Distributed Malware Through 3,000+ Fake Tutorial Videos

Four-year campaign hijacked legitimate channels to push info-stealers disguised as cracked software and game cheats, with activity tripling in 2025

Google has removed over 3,000 malicious videos from YouTube that formed part of a sophisticated malware distribution network active since 2021, security researchers revealed this week.

The campaign, dubbed "YouTube Ghost Network" by Check Point researchers, exploited user trust in popular tutorial content to distribute credential-stealing malware disguised as cracked software and video game cheats. Activity surged dramatically in 2025, with malicious video uploads tripling compared to previous years.

What makes this operation particularly concerning is its scale and coordination: thousands of fake and compromised YouTube accounts worked in concert to create an illusion of legitimacy, using artificial engagement to make dangerous content appear safe and popular.

Hijacked Channels with Hundreds of Thousands of Followers

The operation didn't rely solely on throwaway spam accounts. Attackers hijacked legitimate YouTube channels—some with substantial followings—to lend credibility to their malicious campaigns.

In one documented case, a compromised channel with 129,000 subscribers posted a video advertising a cracked version of Adobe Photoshop. The video accumulated nearly 300,000 views and over 1,000 likes before removal, showing how a hijacked channel's reputation can amplify a campaign's reach.

Another compromised channel specifically targeted cryptocurrency users, redirecting them to sophisticated phishing pages hosted on Google Sites—leveraging yet another trusted Google platform to appear legitimate.

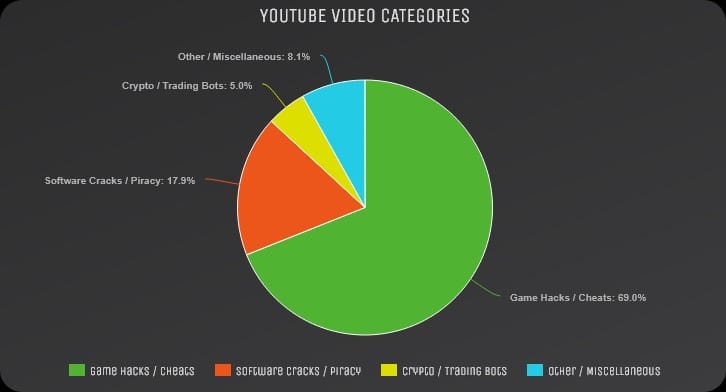

The Bait: Cracked Software and Game Cheats

The malicious videos posed as tutorials offering free access to expensive commercial software and popular game enhancements:

Most popular lures:

- Cheats and hacks for Roblox (the most widely used bait)

- Cracked versions of Adobe Photoshop, Lightroom, and other Adobe creative tools

- Pirated Microsoft Office installations

- FL Studio music production software cracks

These targets were strategic: all represent expensive software or sought-after game advantages that users actively search for, with many willing to take risks to obtain free versions.

The Payload: Credential Stealers

Users who followed the video instructions received Rhadamanthys and Lumma, two powerful information-stealing malware families designed to harvest:

- Login credentials for online accounts

- Cryptocurrency wallet data and private keys

- Browser-stored passwords and autofill information

- Session tokens for active accounts

- Financial information and payment details

Both stealers are sold on underground markets and widely used by cybercriminals for financial fraud and account takeover operations.

Sophisticated Social Engineering Tactics

The YouTube Ghost Network employed a coordinated, multi-account strategy to manufacture legitimacy:

Specialized account roles:

- Publishers: Posted malicious tutorial videos with download links

- Engagers: Artificially inflated views and likes and left positive comments to create social proof

- Distributors: Shared links to malicious videos through YouTube's Community feature to reach broader audiences

"This campaign exploited trust signals—views, likes, comments—to make the malicious content appear safe," Check Point researchers explained. "What looked like a helpful tutorial turned out to be an elaborate cyber trap."

The coordinated engagement created a dangerous feedback loop: high view counts and positive comments made videos appear in YouTube recommendations, driving even more victims to the malicious content.

The Infection Process

Victims following video instructions were guided through a multi-step infection process designed to bypass security controls:

- Disable antivirus: Videos explicitly instructed users to turn off security software, claiming it would "falsely flag" the cracked software

- Download archives: Users were directed to files hosted on legitimate platforms—Dropbox, Google Drive, or MediaFire—to avoid suspicion

- Execute malware: The downloaded archives contained info-stealers instead of promised software

- Data exfiltration: Once executed, malware harvested credentials and transmitted them to attacker-controlled servers

The use of legitimate file-sharing platforms made it harder for users to recognize the threat, as many expect malicious downloads to come from obviously suspicious websites.
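
The steps above leave traces that can be checked in a video's description text. The following is a minimal sketch of such a check, not Check Point's or YouTube's method. The phrase pattern, the host list (taken from the platforms named above), and the function names `links_to_file_host` and `score_description` are assumptions made for illustration.

```python
import re
from urllib.parse import urlparse

# Hypothetical pattern: an instruction to switch off security software.
DISABLE_AV_PATTERNS = [
    re.compile(
        r"\b(disable|turn off|deactivate)\b.{0,40}"
        r"\b(antivirus|anti-virus|defender|firewall)\b",
        re.I,
    ),
]

# File-sharing hosts named in the article (illustrative, not exhaustive).
FILE_HOSTS = ("dropbox.com", "drive.google.com", "mediafire.com")

URL_RE = re.compile(r"https?://[^\s)>\]]+", re.I)


def links_to_file_host(text):
    """Return URLs whose hostname is, or is a subdomain of, a listed host."""
    hits = []
    for url in URL_RE.findall(text):
        host = (urlparse(url).hostname or "").lower()
        if any(host == h or host.endswith("." + h) for h in FILE_HOSTS):
            hits.append(url)
    return hits


def score_description(text):
    """Collect two signals; both together suggest the text needs review."""
    asks_disable = any(p.search(text) for p in DISABLE_AV_PATTERNS)
    file_links = links_to_file_host(text)
    return {
        "asks_to_disable_security": asks_disable,
        "file_host_links": file_links,
        "needs_review": asks_disable and bool(file_links),
    }
```

Neither signal is damning on its own, since plenty of legitimate videos link to Dropbox or Google Drive. The sketch therefore flags a description only when an instruction to disable protection and a file-host link appear together, and it treats a match as a prompt for human review rather than a verdict.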

Resilient Infrastructure Evaded Takedowns

The campaign's operators demonstrated sophisticated infrastructure management that allowed persistence across four years:

- Regular payload rotation: Attackers frequently changed malware variants to evade detection

- Dynamic link updates: Download URLs were regularly refreshed to stay ahead of blocklists

- Rapid account replacement: When accounts were banned, new ones immediately took their place

- Modular architecture: Separation of roles (publishers, engagers, distributors) meant partial takedowns didn't collapse the entire operation

"The network's modular design, with loaders, commenters, and link distributors, allowed the YouTube Ghost Network to persist for years," Check Point noted.

This resilience explains how the campaign kept operating from 2021 into 2025, until Google's mass removal action.

Parallels to GitHub "Stargazers Ghost Network"

Check Point researchers noted striking similarities to the "Stargazers Ghost Network" they discovered on GitHub in 2024. That campaign used thousands of fake developer accounts to create malicious repositories that appeared legitimate through artificial star ratings and contributor activity.

Both operations demonstrate a common pattern: attackers exploiting trusted platforms' social proof mechanisms to make malicious content appear legitimate. As platforms implement security controls, threat actors adapt by manipulating the trust signals users rely on to evaluate safety.

2025 Escalation Raises Questions

The tripling of malicious video uploads in 2025 suggests either a significant operational expansion or increased success that encouraged greater investment. Several factors may explain the escalation:

- Proven effectiveness: Years of successful operations demonstrated the campaign's profitability

- Platform vulnerabilities: Gaps in YouTube's detection systems allowed scale-up

- Increased demand: Growing interest in AI tools, cryptocurrency, and game cheats provided more lucrative targets

- Improved infrastructure: Refined techniques made large-scale operations more feasible

The timing also raises the question of whether the operation has been fully contained or whether related campaigns continue under different tactics.

Attribution: Financial Criminals or State Actors?

Check Point analysts couldn't definitively attribute the YouTube Ghost Network to specific threat actors. The campaign's characteristics suggest financially motivated cybercriminals seeking to monetize stolen credentials and cryptocurrency.

However, researchers noted that the sophisticated infrastructure and coordination tactics could also interest state-sponsored groups. The same techniques used to distribute info-stealers could deliver espionage tools, making the operational model attractive for targeted intelligence operations.

"It appears that the thousands of malicious videos are the work of financially motivated threat actors; however, such tactics could also interest state-sponsored hackers for use in targeting specific objectives," the report noted.

Protecting Against Malicious Tutorial Campaigns

Security experts recommend several defensive measures:

For individuals:

- Never disable antivirus software to install programs, regardless of instructions

- Avoid pirated software and game cheats, which are common malware vectors

- Verify software downloads come from official developer websites only

- Be skeptical of tutorials with suspiciously high engagement but poor production quality

- Use hardware security keys or authenticator apps so that high-value accounts stay protected even if passwords are stolen

For platforms:

- Implement behavioral analysis to detect coordinated inauthentic engagement

- Flag videos instructing users to disable security software

- Monitor for signs of account compromise, such as abrupt changes in a channel's content

- Develop better detection for malicious links in video descriptions and comments
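
One simple form of behavioral analysis is to measure how much the sets of accounts engaging with different videos overlap. Organic audiences rarely share most of their members across unrelated videos, while a pool of "engager" accounts does. The sketch below assumes a platform already has a mapping from video ID to the set of account IDs that liked or commented. The function names, the 0.6 threshold, and the minimum set size are hypothetical values chosen for illustration, not figures from the Check Point report.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity of two sets: |a & b| / |a | b| (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suspicious_pairs(engagers_by_video, threshold=0.6, min_accounts=3):
    """Return (video_a, video_b, similarity) for pairs of videos whose
    engaging-account sets overlap at or above the threshold."""
    flagged = []
    for va, vb in combinations(sorted(engagers_by_video), 2):
        accts_a = engagers_by_video[va]
        accts_b = engagers_by_video[vb]
        if len(accts_a) < min_accounts or len(accts_b) < min_accounts:
            continue  # too few accounts for the overlap to mean anything
        sim = jaccard(accts_a, accts_b)
        if sim >= threshold:
            flagged.append((va, vb, sim))
    return flagged
```

Comparing every pair of videos scales quadratically, so a production system would need a cheaper candidate-selection step first. The sketch only shows the signal itself.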

For organizations:

- Educate employees about risks of pirated software and unauthorized tools

- Monitor endpoints for info-stealer indicators of compromise

- Implement application control to prevent unauthorized software installation

- Deploy EDR solutions that detect stealer behavior patterns
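
As a rough illustration of the endpoint-monitoring item, the sketch below hashes files under a directory and compares them with a list of known-bad SHA-256 values, such as those published in a vendor's indicator feed. The function names and the idea of scanning a downloads folder are assumptions for the example, and no real hashes are included.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large files are not read into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_ioc_matches(root, known_bad_hashes):
    """Return paths under root whose SHA-256 appears in known_bad_hashes."""
    bad = {h.lower() for h in known_bad_hashes}
    matches = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            if sha256_of(path) in bad:
                matches.append(path)
        except OSError:
            continue  # unreadable file: skip it rather than abort the scan
    return matches
```

Hash matching catches only samples that have already been catalogued. Because the operators rotated payloads regularly, a hash list goes stale quickly, which is why the recommendations pair it with behavior-based EDR detection.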

The Broader Threat

The YouTube Ghost Network highlights how trusted platforms become malware distribution infrastructure when attackers manipulate social proof mechanisms. With billions of users relying on engagement signals to evaluate content trustworthiness, these tactics prove dangerously effective.

"In today's threat landscape, a popular video can be as dangerous as a phishing email," Check Point researchers warned. "This underscores that even trusted platforms are not immune to abuse."

The campaign's four-year lifespan—and dramatic 2025 escalation—demonstrates that platform security measures struggled to keep pace with attacker adaptations. While Google's removal of 3,000+ videos represents significant remediation, questions remain about whether the underlying infrastructure has been fully dismantled or simply migrated to new accounts.

The Bottom Line

The YouTube Ghost Network serves as a sobering reminder that malware distribution has evolved beyond obviously suspicious emails and shady websites. Modern campaigns exploit the very trust mechanisms platforms built to help users find quality content.

For users seeking cracked software or game cheats: the risk isn't worth it. The "free" software comes with hidden costs—stolen credentials, compromised cryptocurrency wallets, and identity theft. Legitimate alternatives, student discounts, and free trials exist for most expensive software.

For platforms: sophisticated detection of coordinated inauthentic behavior must become standard. When thousands of accounts can work in concert to make malicious content appear legitimate, engagement metrics alone cannot be trusted as safety signals.

The campaign's success across four years proves that attackers have mastered the art of exploiting platform trust. Until platforms catch up with equally sophisticated detection, users must remain the first line of defense—treating viral tutorials with the same skepticism they'd apply to unsolicited emails.