Anthropic: Chinese Hackers Used Claude Code for Cyber Espionage

Chinese hackers used Anthropic's AI tool Claude Code to target 30 major companies and government organizations. According to Anthropic, the threat actors successfully breached several of them.

The Campaign

A new report from Anthropic reveals that the malicious campaign unfolded in mid-September 2025, targeting major technology companies, financial institutions, chemical manufacturers, and government agencies.

Anthropic's developers emphasize a critical distinction: although humans selected the attack targets, this is the first documented case in which agentic AI was successfully used to gain access to and collect data from "verified valuable targets," including large technology companies and government institutions.

The Threat Actor: GTG-1002

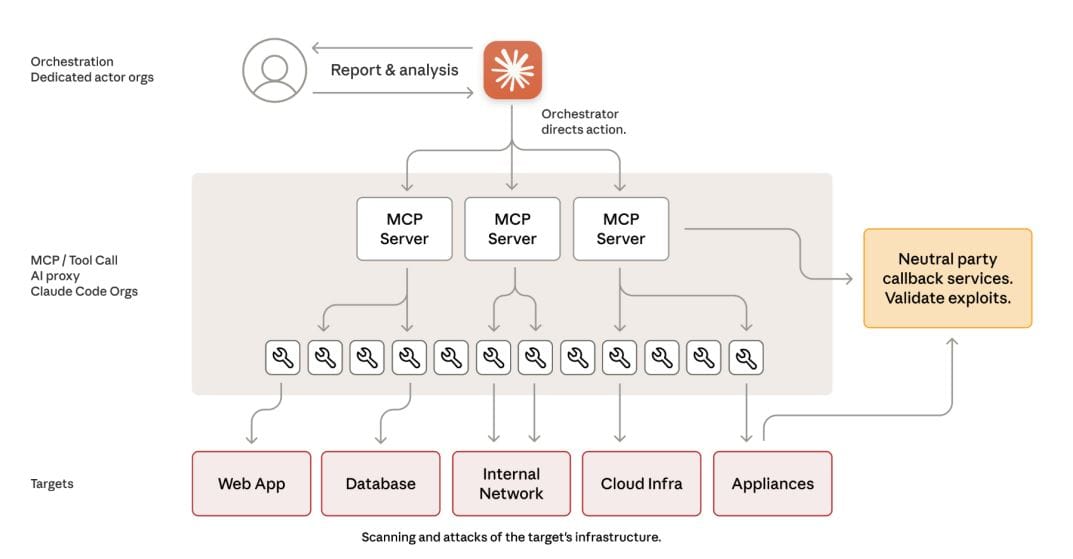

Researchers are tracking the Chinese APT group behind these attacks as GTG-1002. The hackers used Claude Code and the Model Context Protocol (MCP) to execute attacks with minimal human involvement.

The framework developed by the attackers used Claude to orchestrate multi-stage operations executed by several Claude sub-agents, each assigned specific tasks:

- Attack mapping

- Infrastructure scanning

- Vulnerability discovery

- Exploitation technique research

After the sub-agents developed exploit chains and created custom payloads, a human operator spent just two to ten minutes reviewing the AI's results and approving the next steps.

Autonomous Attack Execution

Following human approval, the AI agents autonomously:

- Found and validated credentials

- Escalated privileges

- Performed lateral movement across networks

- Accessed sensitive data

- Exfiltrated information

After exploitation, the human operator only needed to review the AI's work once more before final data extraction.

"By framing these tasks to Claude as routine technical requests through carefully crafted prompts and established roles, the attackers compelled Claude to perform individual components of the attack chain without access to the broader malicious context," the report explains.

Anthropic's Response

Upon discovering the abuse, Anthropic initiated a comprehensive response:

- Blocked accounts associated with the malicious activity

- Identified the full scope of operations

- Notified all victims

- Shared collected data with law enforcement agencies

Escalation From Previous Attacks

The newly disclosed attacks represent a "significant escalation" from those described in Anthropic's August 2025 report, which detailed how criminals used Claude in extortion attacks affecting 17 organizations. In those incidents, ransom demands ranged from $75,000 to $500,000, but the malicious activity remained primarily human-driven rather than AI-driven.

"While we predicted these capabilities would evolve in the future, we were struck by how quickly it happened and at what scale," Anthropic specialists wrote.

AI Limitations Revealed

The report also highlights a significant weakness: while operating autonomously, Claude hallucinated, exaggerating and fabricating results. Human operators had to verify its output manually.

Examples of AI failures included:

- Claiming to have found credentials that didn't work

- Reporting discovery of "critical information" that was actually publicly available data

Anthropic asserts that such errors remain an "obstacle to fully autonomous cyberattacks." At least for now.