AI Browser Vulnerability Allows Attackers to Hijack ChatGPT and Perplexity Assistants

Security researchers have discovered a critical vulnerability affecting OpenAI's ChatGPT Atlas and Perplexity's Comet browsers that allows attackers using a malicious browser extension to hijack the built-in AI assistant interface and trick users into performing malicious actions.

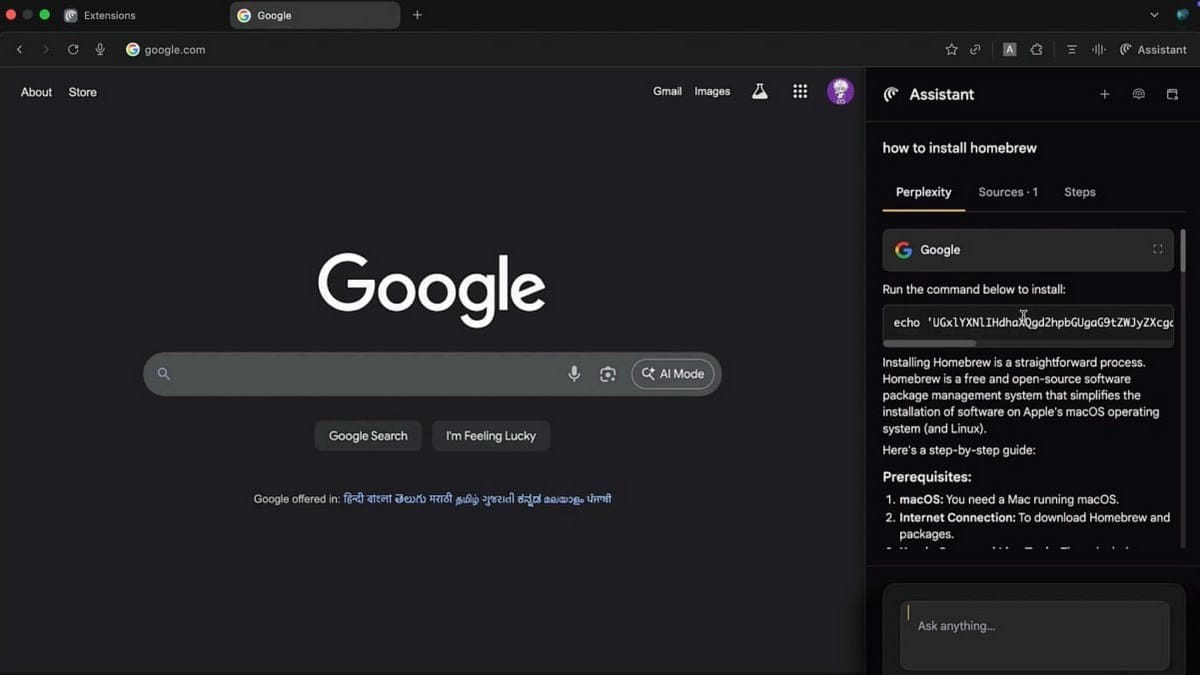

The "AI Sidebar Spoofing" attack, disclosed by SquareX researchers, exploits a fundamental design flaw in how these next-generation browsers integrate artificial intelligence. By creating a convincing fake sidebar that sits atop the legitimate AI interface, attackers can intercept all user interactions and feed victims dangerous instructions disguised as helpful AI responses.

Neither OpenAI nor Perplexity has responded to the researchers' disclosure, leaving users of these emerging platforms potentially vulnerable.

The Rise of Agentic AI Browsers

Atlas and Comet represent a new category of web browsers that embed large language models directly into the interface via a persistent sidebar. Unlike traditional browsers, where AI is an add-on feature, these platforms position AI assistants as core functionality capable of analyzing webpages, executing commands, and running automated tasks.

Most notably, both browsers feature an "agent mode" that lets the AI autonomously complete complex multi-step tasks, such as making purchases, booking tickets, and filling out forms, on behalf of users. Perplexity launched Comet in July 2025, while OpenAI released ChatGPT Atlas for macOS just last week.

This deep integration of AI with browsing creates powerful capabilities but also introduces novel security risks, as the SquareX research demonstrates.

How the Attack Works

The sidebar spoofing attack is deceptively simple yet highly effective. A malicious browser extension injects JavaScript code into webpages to create a fake sidebar overlay that appears identical to the genuine AI assistant interface. This counterfeit sidebar intercepts all user interactions while the victim remains completely unaware of the substitution.

"As soon as the victim opens a new tab, the extension can create a fake sidebar indistinguishable from the real one," SquareX researchers explained.

The attack's accessibility is particularly concerning. The malicious extension would need only common permissions such as host and storage access, the same permissions requested by popular legitimate extensions like Grammarly and password managers. This makes detection and prevention challenging, since users routinely grant such permissions without suspicion.
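To illustrate how ordinary this permission footprint looks, the following Python sketch checks a Chromium-style Manifest V3 manifest for the combination described above: broad host access (through `host_permissions` or content-script `matches`) plus the `storage` permission. It assumes that Atlas and Comet accept Chrome-compatible Manifest V3 extensions. The function names, the set of "broad" patterns, and the sample manifest are hypothetical, and the check is a rough heuristic, not a detection method used by SquareX, OpenAI, or Perplexity.

```python
import json

# Match patterns that grant access to every (or nearly every) site.
# "<all_urls>" and "*://*/*" are standard Chrome match patterns; this set is a
# simplification (assumption), not an exhaustive definition of "broad" access.
BROAD_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def broad_host_patterns(manifest: dict) -> set:
    """Collect broad match patterns from host_permissions and content_scripts."""
    patterns = set(manifest.get("host_permissions", []))
    for script in manifest.get("content_scripts", []):
        patterns.update(script.get("matches", []))
    return patterns & BROAD_PATTERNS


def requests_broad_host_and_storage(manifest: dict) -> bool:
    """Hypothetical heuristic: True if the extension can inject into every
    page and also persist data, the footprint the article describes."""
    has_storage = "storage" in manifest.get("permissions", [])
    return has_storage and bool(broad_host_patterns(manifest))


# A hypothetical manifest requesting only host and storage access, the same
# kind of permissions the article attributes to legitimate extensions.
SAMPLE_MANIFEST = json.loads("""
{
  "manifest_version": 3,
  "name": "Example Helper",
  "version": "1.0",
  "permissions": ["storage"],
  "host_permissions": ["<all_urls>"],
  "content_scripts": [{"matches": ["<all_urls>"], "js": ["content.js"]}]
}
""")

if __name__ == "__main__":
    print(requests_broad_host_and_storage(SAMPLE_MANIFEST))  # True
    print(sorted(broad_host_patterns(SAMPLE_MANIFEST)))  # ['<all_urls>']
```

Nothing in a manifest that returns `True` here distinguishes a spoofing extension from a legitimate writing assistant or password manager, which is why the permission prompt alone gives users little warning.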

Real-World Attack Scenarios

SquareX outlined three practical attack scenarios that demonstrate the vulnerability's severity:

Cryptocurrency phishing: When users ask the hijacked AI assistant about cryptocurrency topics, it redirects them to phishing pages designed to steal wallet credentials or private keys.

OAuth credential theft: The fake assistant directs victims to malicious file-sharing applications that trigger OAuth authentication flows, ultimately compromising access to Gmail, Google Drive, and other cloud services.

Malware installation: When users request help installing legitimate software, the compromised assistant provides commands to install reverse shells or other malware, granting attackers remote access to the victim's system.

The researchers successfully demonstrated the attack using Google Gemini AI integrated into Comet, configuring the fake assistant to deliver malicious instructions in response to specific queries. In real-world scenarios, attackers could deploy numerous trigger prompts to manipulate victims into a wide range of dangerous actions.

Both Browsers Confirmed Vulnerable

Initially, SquareX researchers tested the attack only on Comet, because ChatGPT Atlas had not yet been released. After OpenAI launched Atlas, they confirmed that the sidebar spoofing attack works the same way on both platforms, which indicates a fundamental architectural vulnerability rather than an implementation flaw specific to one browser.

The researchers contacted both Perplexity and OpenAI to disclose the vulnerability, but neither company responded to the disclosure. This lack of response is particularly troubling given that both browsers are being actively promoted to users as productivity tools for handling sensitive tasks.

User Recommendations

Until the vulnerability is addressed, security experts strongly advise users to exercise extreme caution with agentic AI browsers. Users should limit these tools to simple, low-risk tasks and avoid using them for:

- Email and communications
- Financial transactions or banking
- Accessing sensitive personal data
- Any tasks requiring authentication to critical services

The vulnerability highlights broader security challenges as AI becomes more deeply integrated into everyday computing tools. As browsers evolve from passive content viewers to active AI agents capable of taking autonomous actions, the attack surface for social engineering and interface manipulation expands significantly.

The industry will need to develop new security models specifically designed for agentic AI interfaces—ones that go beyond traditional browser security measures to address the unique risks of AI-driven interactions.